EM-Fusion: Dynamic Object-Level SLAM With Probabilistic Data Association

Abstract

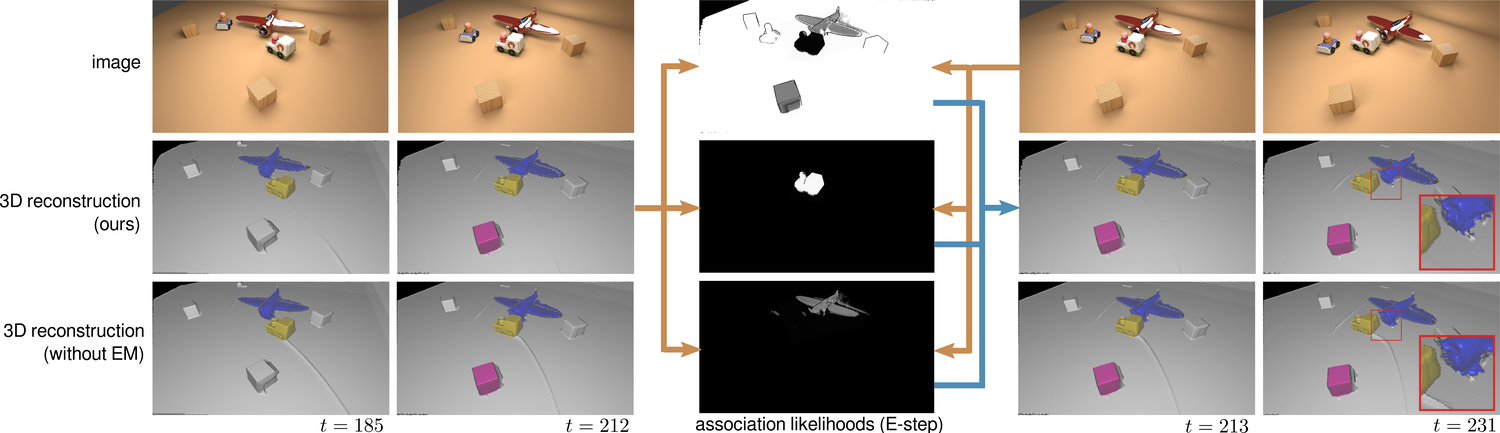

The majority of approaches for acquiring dense 3D environment maps with RGB-D cameras assume static environments or reject moving objects as outliers. The representation and tracking of moving objects, however, has significant potential for applications in robotics or augmented reality. In this paper, we propose a novel approach to dynamic SLAM with dense object-level representations. We represent rigid objects in local volumetric signed distance function (SDF) maps, and formulate multi-object tracking as direct alignment of RGB-D images with the SDF representations. Our main novelty is a probabilistic formulation which naturally leads to strategies for data association and occlusion handling. We evaluate our approach experimentally and demonstrate that it compares favorably with state-of-the-art methods in terms of robustness and accuracy.
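The abstract only summarizes the probabilistic formulation. To make the general idea concrete (soft data association alternating with weighted direct alignment against an SDF), here is a toy EM-style sketch in Python 3.8+. It is not the EM-Fusion implementation. The analytic sphere SDF, the translation-only motion, the single object plus uniform outlier class, and all names and parameter values (`sdf`, `e_step`, `m_step`, `em_track`, `sigma`, `prior`, `outlier_density`) are hypothetical assumptions chosen for illustration.

```python
import math

# Hypothetical object model: an analytic sphere SDF in the object's frame.
# (A real system would query a voxelized SDF volume instead.)
CENTER = (0.0, 0.0, 0.0)
RADIUS = 1.0


def sdf(p):
    """Signed distance from p to the sphere surface (negative inside)."""
    return math.dist(p, CENTER) - RADIUS


def sdf_grad(p):
    """Gradient of the sphere SDF: unit vector from the center towards p."""
    n = math.dist(p, CENTER)
    return tuple((p[i] - CENTER[i]) / n for i in range(3))


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve the 3x3 system a x = b with Cramer's rule."""
    d = det3(a)
    return [det3([[b[r] if k == c else a[r][k] for k in range(3)] for r in range(3)]) / d
            for c in range(3)]


def e_step(points, t, sigma, prior, outlier_density):
    """Posterior probability that each point belongs to the object rather than
    to a uniform outlier class, given the current translation t."""
    weights = []
    for p in points:
        r = sdf(tuple(p[i] - t[i] for i in range(3)))
        lik = math.exp(-0.5 * (r / sigma) ** 2) / (math.sqrt(2 * math.pi) * sigma)
        weights.append(prior * lik / (prior * lik + (1 - prior) * outlier_density))
    return weights


def m_step(points, t, weights):
    """One weighted Gauss-Newton step on t, minimizing sum_i w_i * sdf(p_i - t)^2."""
    h = [[0.0] * 3 for _ in range(3)]
    g = [0.0] * 3
    for p, w in zip(points, weights):
        q = tuple(p[i] - t[i] for i in range(3))
        r = sdf(q)
        jac = [-gi for gi in sdf_grad(q)]  # derivative of r with respect to t
        for a in range(3):
            g[a] += w * jac[a] * r
            for b in range(3):
                h[a][b] += w * jac[a] * jac[b]
    dt = solve3(h, [-gi for gi in g])
    return tuple(t[i] + dt[i] for i in range(3))


def em_track(points, t0=(0.0, 0.0, 0.0), iters=10,
             sigma=0.05, prior=0.8, outlier_density=0.1):
    """Alternate soft data association (E-step) and weighted alignment (M-step)."""
    t = t0
    for _ in range(iters):
        w = e_step(points, t, sigma, prior, outlier_density)
        t = m_step(points, t, w)
    return t, e_step(points, t, sigma, prior, outlier_density)


def sample_sphere(n, offset):
    """n roughly uniform surface points of the sphere, shifted by offset."""
    golden = math.pi * (3 - math.sqrt(5))
    pts = []
    for i in range(n):
        z = 1 - 2 * (i + 0.5) / n
        rad = math.sqrt(1 - z * z)
        th = i * golden
        pts.append((CENTER[0] + RADIUS * rad * math.cos(th) + offset[0],
                    CENTER[1] + RADIUS * rad * math.sin(th) + offset[1],
                    CENTER[2] + RADIUS * z + offset[2]))
    return pts


if __name__ == "__main__":
    t_true = (0.1, -0.05, 0.08)
    observed = sample_sphere(200, t_true) + [(3.0, 3.0, 3.0), (-2.5, 0.4, 2.0)]
    t_est, w = em_track(observed)
    print("estimated translation:", t_est)
    print("outlier weights:", w[-2:])
```

The far-away points receive association weights of essentially zero, so they drop out of the weighted alignment without a hard threshold. This is the kind of behavior a probabilistic data-association formulation aims for. A practical RGB-D tracker would additionally need a volumetric SDF, full 6-DoF poses and several competing object models, none of which this sketch attempts.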

Referencing EM-Fusion

```bibtex
@InProceedings{strecke2019_emfusion,
  title     = {{EM}-Fusion: Dynamic Object-Level SLAM With Probabilistic Data Association},
  author    = {Strecke, Michael and St{\"u}ckler, J{\"o}rg},
  booktitle = {Proceedings IEEE/CVF International Conference on Computer Vision 2019 (ICCV)},
  pages     = {5864--5873},
  publisher = {IEEE},
  month     = oct,
  year      = {2019},
  doi       = {10.1109/ICCV.2019.00596},
  month_numeric = {10}
}
```